Build A Better Blog With Quality Content

Befriend The Panda – Create Quality Content

Many legal blogs have seen a decline in impressions, particularly sites that have more dynamic blog posts than static practice area pages. Often the posts are of lower quality than the more focused static legal pages. They lean on pop or legal news such as recent celebrity divorces, or on local traffic and police information such as car accidents or recent arrests, which frequently results in content duplicated across many online properties.

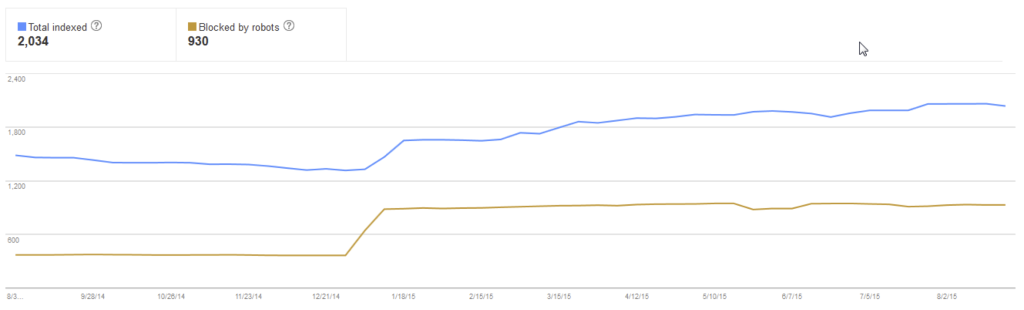

Panda quality guidelines frown on these types of low-quality posts. The lower-quality content also trips the quality algorithm filters with poor engagement signals: high bounce rates and little time on site. For example, a high-profile New York law firm has this type of site profile:

- 1,987 internal URLs

- 81.7% have a 100% bounce rate

- 65% of the bounce comes from blog pages

- 25% of the blog posts have a 0% CTR

Not that there’s anything wrong with an 81% bounce rate, if you don’t mind being excluded from search results. Recently, Google has simply been de-listing hundreds of these posts from search results. Early adopters of blogging that publish multiple blog posts per week of suspect quality are at risk of getting caught in these ever-strengthening quality filters. These blogs are definitely sending the wrong signals to the search engines.
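A profile like the one above can be pulled together from a page-level analytics export. The following is a minimal sketch in Python, not a description of how the numbers in this post were produced. The `PageStats` fields, the `/blog/` URL prefix and the metric names are all assumptions made for illustration; map them to whatever your analytics tool actually exports.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """One row of a hypothetical page-level analytics export."""
    url: str
    sessions: int
    bounces: int
    impressions: int
    clicks: int


def share(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part` (0.0 when `whole` is 0)."""
    return 100.0 * part / whole if whole else 0.0


def site_profile(pages: list[PageStats], blog_prefix: str = "/blog/") -> dict[str, float]:
    """Summarise bounce and CTR problems across a site."""
    # Pages where every recorded session bounced.
    full_bounce = [p for p in pages if p.sessions > 0 and p.bounces == p.sessions]
    # Blog posts are identified by URL prefix (an assumption about site layout).
    blog = [p for p in pages if p.url.startswith(blog_prefix)]
    # Blog posts that were shown in search but never clicked.
    zero_ctr_blog = [p for p in blog if p.impressions > 0 and p.clicks == 0]
    total_bounces = sum(p.bounces for p in pages)
    blog_bounces = sum(p.bounces for p in blog)
    return {
        "pct_urls_full_bounce": share(len(full_bounce), len(pages)),
        "pct_bounces_from_blog": share(blog_bounces, total_bounces),
        "pct_blog_zero_ctr": share(len(zero_ctr_blog), len(blog)),
    }
```

Run this against a full export and the three percentages give you a quick read on whether the blog is the part of the site dragging down engagement.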

Be Friends With Bots!

Add into this mix the bad SEO tactic of blocking tags via robots.txt. It is not uncommon, but doing so creates a very negative search engine problem. The tactic is deployed to keep bots from crawling tags and so lighten server loads. It creates a negative SEO environment, however, because showing links on pages that people can see while forbidding the search bots access to the same data signals to the search engines that something dark and mysterious is happening behind closed doors. Search engines reward transparency and dislike “hidden” content. Blog tags are used throughout sites in various ways that can include:

- Tag clouds

- Category tags

- Tag bar on side navigation

- Tags within each post

- Tags archives page

- Tags on month/year archives

- Tags within schema code

When the blocking is excessive, it appears to a search bot that a “dark web” is being created behind the wall of robots.txt blocking. Again, not a good way to signal quality to the search engines.
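One quick way to see whether your own robots.txt is hiding tag pages is to test a handful of URLs against it. The sketch below uses Python's standard-library `urllib.robotparser`. The sample rules and the `example.com` URLs are made up for illustration. Note that this parser does plain prefix matching and, as far as I know, does not interpret Google-style wildcards such as `/*?`, so treat it as a rough check rather than a replica of how Googlebot reads the file.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt text forbids for user_agent."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


# Hypothetical rules that block tag archives, the pattern warned about above.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /tag/
"""

SAMPLE_URLS = [
    "https://example.com/tag/divorce/",
    "https://example.com/blog/child-custody-basics/",
]

if __name__ == "__main__":
    for url in blocked_urls(SAMPLE_ROBOTS, SAMPLE_URLS):
        print("blocked:", url)
```

If tag URLs that visitors can click show up in the blocked list, that is the mismatch between what people see and what bots see.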

How To Fix A Bad Blog With Grassroots SEO

1. Don’t block the bots. Transparency for most bots is a good thing. Sure, some folders may need security, but it’s best to let your blog and site be crawled. You can easily control what is and is not crawled with a robots.txt file. An example for WordPress might be something like this:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/

Disallow: /wp-content/plugins/

Disallow: /wp-content/cache/

Disallow: /wp-content/themes/

Disallow: /trackback/

Disallow: /*/trackback/

Disallow: /*?*

Disallow: /*?

Disallow: /*~*

Disallow: /*~

Disallow: /wp-*

Disallow: /comments/feed/

User-agent: Mediapartners-Google*

Allow: /

User-agent: Googlebot-Image

Allow: /wp-content/uploads/

User-agent: Adsbot-Google

Allow: /

User-agent: Googlebot-Mobile

Allow: /

Sitemap: http://domainname.com/post-sitemap.xml

Another robots.txt example might be:

User-agent: *

#Global Rules – START

Disallow: /content

Disallow: /data

Disallow: /modules

Disallow: /Disclaimer.shtml

Disallow: /E-mail.shtml

Disallow: /Error.shtml

Disallow: /Error-Espanol.shtml

Disallow: /Gracias.shtml

Disallow: /Thank-You.shtml

Disallow: /mt-bin/

Allow: /content/images

Allow: /content/css

#Global Rules – END

#Site Specific Rules – START

Sitemap: http://www.domainname.com/sitemap.xml.gz

#Site Specific Rules – END
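The second example pairs `Disallow: /content` with `Allow: /content/images` and `Allow: /content/css`, so it helps to know which rule wins. Under the Robots Exclusion Protocol (RFC 9309), which Google also documents following, the most specific (longest) matching rule applies, and an Allow wins a tie. Here is a minimal Python sketch of that precedence. It handles plain path prefixes only, with no wildcard support, and the `is_allowed` helper and `RULES` list are illustrative names, not part of any library.

```python
def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Apply longest-match precedence to (directive, prefix) rules.

    Plain prefixes only; '*' and '$' wildcards are not handled here.
    """
    best_len = -1
    allowed = True  # a path matched by no rule may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        # A longer prefix is more specific; on equal length, Allow wins.
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


# Directory rules taken from the second robots.txt example.
RULES = [
    ("Disallow", "/content"),
    ("Disallow", "/data"),
    ("Disallow", "/modules"),
    ("Allow", "/content/images"),
    ("Allow", "/content/css"),
]

if __name__ == "__main__":
    for path in ("/content/images/logo.png", "/content/drafts/a.html", "/blog/post/"):
        print(path, "->", "allowed" if is_allowed(path, RULES) else "blocked")
```

So images and stylesheets stay crawlable while the rest of `/content` is blocked. Parsers that simply take the first matching rule in file order may read the same file differently.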

2. Promote quality content. Start by classifying the blog posts. One quick way to do this is to sort your content by highest bounce rate and shortest time on page. These are often the most troubled pages and posts. Review each one: is it good, with no action needed at this time, or is it poor and in need of removal? If it is questionable, can it be corrected?

Sometimes it’s as simple as combining several short, similar topics into one or two longer, higher-quality pieces of content. Another option to improve content quality might be to rewrite the first and last paragraphs and add semantic phrases to the header tags (H1, H2). This refined and refocused content can provide a boost to overall site quality.
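The first pass in step 2, ranking posts by highest bounce rate and shortest time on page, can be sketched in a few lines of Python. This is only an illustration: the `PostMetrics` fields and the `limit` cut-off are assumptions, and the good, poor or questionable call on each post still has to be made by a person reading it.

```python
from dataclasses import dataclass


@dataclass
class PostMetrics:
    """Hypothetical per-post numbers from an analytics export."""
    url: str
    bounce_rate: float          # fraction of sessions that bounced, 0.0 to 1.0
    avg_seconds_on_page: float


def review_queue(posts: list[PostMetrics], limit: int = 20) -> list[PostMetrics]:
    """Return the posts to review first: highest bounce rate, then shortest time on page."""
    ranked = sorted(posts, key=lambda p: (-p.bounce_rate, p.avg_seconds_on_page))
    return ranked[:limit]
```

Work down the queue from the top, and stop once the posts start looking healthy.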

3. Promote socially. As the content improvements are made, send a fresh set of eyes there with links from social posts when appropriate. This is a nice way to send new signals about content quality improvements.

By paying some attention to on-page analytics, it’s not all that hard to monitor your SEO efforts. A small focus on content quality will bring big benefits for both your audience and your website metrics. Make sure that you include content KPIs in your grassroots SEO strategy; it will reward your brand over the long term.

A seasoned digital marketing professional with over 20 years of expertise in digital marketing, search engine optimization, search engine marketing, brand development, conversion optimization, lead generation, web development and data analytics. He is a strategic digital marketing thought leader in a multitude of business verticals including automotive, education, financial services, legal marketing and professional services.